Greetings,

In the past three years, I have used Fortran extensively in my research, particularly in engineering geodesy and automated deformation monitoring. Following a prototype in Python, the Deformation Monitoring Package (DMPACK) is both a software library and a collection of programs for sensor control and time series processing in geodesy and geotechnics, written in Fortran 2018.

While DMPACK is still in development, I’d like to present the project as an example of general-purpose programming in modern Fortran. Essentially, DMPACK is a collection of codes to build autonomous sensor networks in the so-called “Internet of Things”, and to monitor terrain, slopes, construction sites, or objects like bridges, tunnels, dams, heritage buildings, and so on, by analysing time series collected from geodetic and geotechnical sensors (such as total stations, GNSS, MEMS, inclinometers, RTDs, …).

The package seeks to cover common tasks encountered in contemporary deformation monitoring, and it already features:

- sensor control (USB, RS-232/422/485, 1-Wire, file system, sub-process),

- sensor data parsing and processing,

- database access and synchronisation,

- data serialisation (ASCII, CSV, JSON, JSON Lines, Fortran Namelist),

- inter-process communication and message passing,

- remote procedure calls via HTTP-RPC API,

- distributed logging,

- Lua scripting,

- time series plotting and report generation,

- Atom web feeds for log messages (XML/XSLT),

- web user interface,

- MQTT connectivity,

- e-mail.

At the current stage, DMPACK consists of 74 modules, 22 programs, and 32 test programs. The whole source tree, including the required ISO_C_BINDING interface modules solely written for this project (to POSIX, SQLite 3, libcurl, PCRE2, Lua 5.4, zlib), counts > 34,000 SLOC in Fortran, spread over 160 source files.

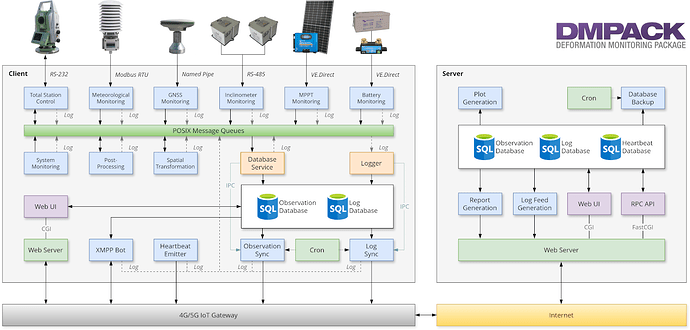

The schema of the DMPACK client–server architecture:

I admit, the package is not incredibly interesting for the non-geodetic audience, but let me give you a brief overview of two aspects that might be worthwhile for other Fortran coders.

HTTP-RPC API

Fortran is not only used to communicate with sensors and actuators, but also for HTTP-based Remote Procedure Calls (RPCs) between client and server, for instance, to collect time series and log messages from remote sensor nodes. My approach is nothing special and is widely used elsewhere; nevertheless, it works reliably, even in Fortran:

- Let’s say an arbitrary sensor is connected through RS-232. On Unix, the serial port is mapped to one of the TTY devices, such as `/dev/ttyS0`. The sensor control program of DMPACK sends requests periodically to the sensor, reads the sensor’s responses, and extracts the measurement values from each raw response using the passed regular expression pattern (PCRE2).

- The observation data is forwarded via a POSIX message queue to DMPACK’s database program, which will store it in a local SQLite database (after validation).

- The sync program synchronises the local database with a remote server by using a simple RPC mechanism:

- An observation is read from the database into a Fortran derived type. The derived type is serialised to Fortran 95 Namelist format.

- The character string of the Namelist representation is deflate-compressed (zlib) and sent to the remote server via HTTP POST (libcurl). Compression is optional, but reduces the payload size from around 40 KiB to less than 4 KiB in the best case, and allows a transmission rate of up to 50 observations per second (locally). There is still some room for optimisation (maybe by using a binary IDL format, concurrency, HTTP/2, or WebSockets).

- Authentication is provided by the web server (HTTP Basic Auth), which is sufficient in this scenario. The authentication backend can be easily upgraded to LDAP if required. The HTTP-API does some basic authorisation, too. All connections are generally TLS-encrypted.

- The RPC server, also written in Fortran and running behind FastCGI, accepts the request, inflates the compressed data (zlib again), reads the Namelist into a derived type, and, after validation, stores the observation in the server database.

- If no error occurred, the client program marks the observation as synchronised, and repeats the above steps for all observations that have not been successfully transmitted yet.

- Additionally, the time series stored in the server database can be accessed through the same HTTP-RPC API. Authenticated users may download observations or logs from arbitrary sensor nodes in CSV, JSON, or JSON Lines format.
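To make the data flow in the steps above a little more tangible, here is a minimal sketch in Python (the language of the earlier prototype), not DMPACK's actual Fortran code. It makes several assumptions: the response format `TEMP=…;PRESS=…` and the pattern are invented for illustration, Python's `re` module stands in for PCRE2, and JSON stands in for the Fortran Namelist serialisation. Only the zlib deflate/inflate round trip corresponds directly to what is described.

```python
import json
import re
import zlib

# Hypothetical response format and pattern; a real sensor defines its own.
# Python's `re` is used here as a stand-in for PCRE2.
PATTERN = re.compile(r"TEMP=(?P<temp>[-+]?\d+\.\d+);PRESS=(?P<press>\d+\.\d+)")


def extract(raw: str) -> dict:
    """Pull the named measurement values out of a raw sensor response."""
    match = PATTERN.search(raw)
    if match is None:
        raise ValueError("response does not match the pattern")
    return {name: float(value) for name, value in match.groupdict().items()}


def pack(observation: dict) -> bytes:
    """Client side: serialise to text (JSON as a stand-in for Namelist),
    then deflate-compress the character string with zlib."""
    text = json.dumps(observation, sort_keys=True)
    return zlib.compress(text.encode("utf-8"))


def unpack(payload: bytes) -> dict:
    """Server side: inflate the payload and read it back into a record."""
    return json.loads(zlib.decompress(payload).decode("utf-8"))


if __name__ == "__main__":
    obs = extract("TEMP=21.50;PRESS=1013.25\r\n")
    payload = pack(obs)
    # The round trip must reproduce the observation exactly.
    assert unpack(payload) == obs
    print(obs, len(payload), "bytes compressed")
```

In a real client, the bytes returned by `pack` would form the body of the HTTP POST request, and the observation would only be marked as synchronised once the server has answered without error.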

Web UI

One of the problems I’ve stumbled upon is the question of how to provide a graphical user interface to configure servers and sensor nodes, and to display time series, logs, and handshake messages. As we all know, GUIs are not a strength of the Fortran ecosystem.

As the sensor nodes are usually deployed in remote locations, some kind of web interface seems to be preferable. (The Tk toolkit would be another decent fit, but running X11 on an “embedded device” + using session forwarding is certainly a little too heavy for those little machines – not taking into account the reality of poor mobile internet connections and high latency.)

For me, the implementation of a user interface is more of a burden than strictly necessary. I would be fine with a text-based UI over SSH, but that won’t win any prizes nowadays. In the end, I’ve just put some constraints on my approach:

- Cross-Environment: The web-based user interface must be able to run on (embedded) sensor nodes and servers alike, as both connect to the same database backend. And I’m not going to write two damn web applications.

- No JavaScript: Not just because it would mean introducing another programming language, but also because text-based browsers (such as elinks or w3m) do not have support for JS in general, and I’d like to access the web interface within an SSH session just in case. And without JS, there is no AJAX, no REST, no SPA cretinism. Certainly, it keeps the architecture simple.

- Semantic Web: Straightforward and mostly class-less HTML5, a single CSS file, no sophisticated web design. I’ve settled for classless.css, which covers all the HTML elements I need (I really appreciate it when people more talented with all this web clobber share their work under a permissive licence).

- Code Reuse: Most of the required functionality is already covered by the DMPACK library in one way or another. Using any other language than Fortran would force me to re-implement large portions of the code base, or to provide an additional C API (just the database abstraction layer over SQLite is > 4,000 SLOC in Fortran …). I guess the most sensible decision here is therefore to just stick with Fortran and accept the limitations.

The skills needed to write web applications in Fortran are probably not in high demand by today’s software industry. However, that does not imply it would be impossible or nonsensical. The Common Gateway Interface (CGI) standard has been around for decades (the first draft specification was published in 1993) – and still works flawlessly in “modern” web environments. The protocol is trivially simple, which is why we can write CGI applications in nearly every (serious) programming language.
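To illustrate how little the protocol asks of a program, here is a minimal CGI sketch in Python (the language of the earlier prototype), not taken from DMPACK. The web server passes request data in environment variables such as `REQUEST_METHOD` and `QUERY_STRING`; the program writes header lines, an empty line, and the body to standard output. The query parameter `node` is a made-up name for illustration.

```python
import html
import os
import sys
from urllib.parse import parse_qs


def respond(environ: dict) -> str:
    """Build a complete CGI response: header, empty line, HTML body."""
    method = environ.get("REQUEST_METHOD", "GET")
    query = parse_qs(environ.get("QUERY_STRING", ""))
    # `node` is a hypothetical parameter name, used only in this sketch.
    node = query.get("node", ["unknown"])[0]
    body = (
        "<!DOCTYPE html>\n"
        "<title>Sensor Node</title>\n"
        f"<p>{html.escape(method)} request for node {html.escape(node)}</p>\n"
    )
    return "Content-Type: text/html; charset=utf-8\r\n\r\n" + body


if __name__ == "__main__":
    # When executed by a web server, the request arrives via the environment.
    sys.stdout.write(respond(dict(os.environ)))
```

The same pattern translates to any language that can read environment variables and write to standard output, Fortran included.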

I’m quite an adherent of CGI, and it’s definitely a choice that gets results displayed very quickly if you cannot or don’t want to use one of the “real” web frameworks the cool kids on that Orange Site are talking about. But polishing the small details can become quite cumbersome in Fortran, and the modest requirements of CGI come at a cost:

- Each request starts a new process, i.e., some CPU load can be expected, but we’re not talking about 10,000 users per hour here. Another disadvantage is the start-up time of a CGI application in contrast to the persistent processes of FastCGI/SCGI. But modern operating systems are not stupid, and continuous program invocations are usually cached.

- Traditionally, CGI demands one application per route/endpoint, but we can overcome this limitation by implementing a basic URL router (how hard can that be in Fortran?).

- Templating is rather ugly in Fortran, especially without a proper templating engine. I’ve settled for hard-coded HTML markup and some string templating, hidden behind library procedures that return formatted and encoded strings (I hope that won’t hurt my street credibility too much).
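The routing and templating points above can be sketched roughly as follows, again in Python rather than Fortran and without any claim to mirror the DMPACK implementation. The route table, the handler names, and the `page` helper are all hypothetical. The sketch assumes the web server passes the requested path in the CGI variable `PATH_INFO`, and it reports an unknown path through the CGI `Status` header.

```python
import html
import os
import sys
from typing import Callable, Dict

Handler = Callable[[dict], str]
ROUTES: Dict[str, Handler] = {}  # hypothetical route table: path -> handler
HEADER = "Content-Type: text/html; charset=utf-8\r\n\r\n"


def route(path: str) -> Callable[[Handler], Handler]:
    """Register a handler for a path in the route table."""
    def register(handler: Handler) -> Handler:
        ROUTES[path] = handler
        return handler
    return register


def page(title: str, content: str) -> str:
    """String 'template': the title is escaped, content is trusted markup."""
    return (
        "<!DOCTYPE html>\n"
        f'<html lang="en"><head><title>{html.escape(title)}</title></head>'
        f"<body><main><h1>{html.escape(title)}</h1>{content}</main></body></html>\n"
    )


@route("/")
def index(environ: dict) -> str:
    return page("Dashboard", "<p>Overview of nodes and sensors.</p>")


@route("/nodes")
def nodes(environ: dict) -> str:
    return page("Nodes", "<p>List of sensor nodes.</p>")


def dispatch(environ: dict) -> str:
    """Pick the handler for PATH_INFO, or answer with a 404 page."""
    path = environ.get("PATH_INFO") or "/"
    handler = ROUTES.get(path)
    if handler is None:
        body = page("Not Found", f"<p>No route for {html.escape(path)}</p>")
        return "Status: 404 Not Found\r\n" + HEADER + body
    return HEADER + handler(environ)


if __name__ == "__main__":
    sys.stdout.write(dispatch(dict(os.environ)))
```

With a single executable dispatching on the path, one CGI program can serve every page of the interface, at the price of keeping the markup inside procedures instead of template files.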

So, my approach is rather old-school, and, I can imagine, seen as legacy, but again, the goal is just to provide some kind of database gateway to display time series and graphs that is guaranteed to work 15 or 20 years from now (try that with React!).

The result is a classic CGI program, executed by lighttpd, that consumes GET and POST requests, does some basic routing, and returns HTML5. The performance is okayish, I guess?: an HTTP response from the DMPACK web UI usually takes around 50 msecs (remotely deployed; locally it’s < 20 msecs). When plotting a graph of 1,000 observations, the response time increases to 150 msecs (< 50 msecs locally). I don’t know how long modern web applications take to render, but these results are acceptable by my standards.

By the way, it just requires some Unix IPC magic to create decent looking graphs for the web in Fortran:

- The web interface provides a simple HTML form for the user to select sensor node, sensor, time range of the observations to plot, and so on. The form data is then submitted to the CGI application via HTTP POST.

- After form validation, the Fortran program fetches the time series from the database, opens Gnuplot in a new process, connects stdin and stdout of the process with pipes, and finally writes plot commands and time series to Gnuplot’s stdin.

- Gnuplot returns the plot in SVG format through stdout. The XML is read from the pipe, Base64-encoded, and pasted as a `data-uri` directly into the `src` attribute of the HTML `<img>` tag. The HTML page, including the encoded SVG, is then returned as a single response to the client. If activated, the web server additionally compresses the response (gzip).
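The pipe-based plotting described above might look like the following Python sketch. It is an illustration under assumptions, not the DMPACK code: the function names and the plot settings are invented, and `render_svg` requires a `gnuplot` executable on the search path. The sketch feeds commands and inline data to Gnuplot's stdin, reads the SVG from stdout, and wraps it in a Base64 data URI.

```python
import base64
import html
import subprocess
from typing import Iterable, Tuple


def gnuplot_script(points: Iterable[Tuple[float, float]]) -> str:
    """Plot commands followed by inline data, terminated by a line with 'e'."""
    lines = [
        "set terminal svg size 800,300",   # without 'set output', SVG goes to stdout
        "plot '-' using 1:2 with lines notitle",
    ]
    lines += [f"{x} {y}" for x, y in points]
    lines.append("e")
    return "\n".join(lines) + "\n"


def render_svg(points: Iterable[Tuple[float, float]]) -> bytes:
    """Run Gnuplot as a child process, connected through stdin/stdout pipes."""
    result = subprocess.run(
        ["gnuplot"],
        input=gnuplot_script(points).encode("ascii"),
        stdout=subprocess.PIPE,
        check=True,
    )
    return result.stdout


def img_tag(svg: bytes, alt: str = "plot") -> str:
    """Embed the SVG as a Base64 data URI in the src attribute of <img>."""
    encoded = base64.b64encode(svg).decode("ascii")
    return (
        f'<img src="data:image/svg+xml;base64,{encoded}" '
        f'alt="{html.escape(alt)}">'
    )

# Usage, if Gnuplot is installed:
#   tag = img_tag(render_svg([(0, 1.0), (1, 1.5), (2, 1.2)]))
```

Embedding the image in the page keeps everything in a single HTTP response, so no temporary files or second request for the image are needed.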

For a few thousand observations, SVG is an appropriate choice. Since the SVG format just contains XML-encoded primitives, the size of an SVG file grows linearly with every additional data point. Alternatively, we could use one of Gnuplot’s raster image terminals instead (like PNG), but these depend on Xlib and some other libraries (for which I don’t have the space on embedded sensor nodes), while SVG is solely text-based.

Conclusion

So, was it worth undertaking this endeavour in Fortran? Well, you know the old saying: we do this not because it is easy, but because we thought it would be easy. The software is quite an improvement in comparison to the Python prototype, but that was to be expected.

POSIX system calls (serial comm., date and time functions, IPC, message passing, …) are obviously MUCH easier in C/C++ or through some abstraction layer. Getting this right took many weeks. And with the new JSON capabilities of SQLite, the bulky derived-type-to-JSON converters in Fortran were superfluous in retrospect.

Hopefully, Fortran will excel once I dive deeper into the time series analysis part of my project (which was the original reason for using Fortran in the first place). For now, I’m satisfied with the outcome, even if an implementation in C + Tcl would have saved me at least a year of development time that was required to write the necessary interface bindings for Fortran. But here we are.

Cheers!