Sorry, I’m just not clear on what the Fortran program does. I think it would be best if you posted the Fortran program. I thought what I shared above matched your Julia program.

mmm I see.

(Reading up) So I guess my fortran version is quite a bit faster than the Julia code then if I beat it even with the f2py penalty…

I didn’t use unsafe math or MKL options to treat denormal floats faster because in my experience those exponential / trig functions is exactly where the safe math is important.

my code is here btw

I personally would very happily jump ship if a modern statically compiled language with minimal runtime (like rust) had facilities for linear algebra as ergonomic as those of Fortran but also pattern matching, decent build tools etc. I’m not aware of any such language though.

Julia is a weird mix. It is mostly a scripting language (no AOT compilation, REPL based workflow). It can be really performant but also really not performant. It doesn’t export C functions AFAIK. So it’s really competing with Python, not Fortran.

I think Fortran’s lunch is really being eaten by C++, sadly enough.

I think first Julia will smash Matlab. Then time will tell.

For GPU programming, Julia is already able to output static compiles for some code (no allocation, no dispatch). Getting good static compiles is also possible for a lot of julia code, and is being actively pursued for building libraries. I agree that much of Julia’s competition is matlab, python and R, but there are also projects like CLIMA.jl and Ocatavian.jl that are full Julia climate models and (in progress) BLAS replacements, which fall more in the Fortran area. The other important thing is that much of the Fortran that is in use is to support slower languages like python/matlab. If Julia starts replacing those, then Fortran will have market taken away.

Hello everyone. I’m the author of the original post.

Sorry to jump into the Fortran forum in this way – as I’m new to blogging I am not entirely familiar with the online etiquette that is required to discuss some matters that can be the subject of online holy wars. I never intended to do that! I blog just to document and share my personal experience, in this case, on how I was very positively surprised by this new Julia language, coming from a Fortran + Python experience. I really appreciate all the feedback that I’m getting here and from the Python camp as well ;-). I am still editing those posts, by the way, and I plan to incorporate as much feedback as possible there.

There are many reasons for calling Fortran from Python, such as being able to access the Python ecosystem of I/O libraries, web and user interfaces, the ease of distribution of code, and so on. So dropping this kind of set-up for a standalone Fortran one looks unreasonable for my use case.

I was suspecting that F2PY was probably introducing some overhead, but somehow I was convinced otherwise. For example, Numpy has now support for row-major ordering, and F2py is supposed to be an automatic way of writing C-wrappers that in turn employ iso_c_bindings on the Fortran side. So, particularly for some simple tests such as this one (where there is very little communication between languages), I don’t see the reason for this overhead. I now do see that there is one, though, thanks a lot for pointing that out! But I don’t understand why there should be one.

As for how this overhead affects the overall results of my test, at least on my computer, I am still getting better timings on my original Julia code than in the standalone Fortran.

This performance difference looks to be mainly because of two reasons: the first is that Julia has faster trig functions than out-of-the-box gfortran (I have been told Julia is not relying on MKL, btw), and then there is the effect of hyperthreading having on impact on the Julia code and not in the OpenMP.

I know one can go with some of Intel’s products to improve performance. From time to time Intel will even make ifort free for personal use (I remember I had a free license that got dropped at one point). The current licensing terms do contain a caveat, as there is the need to purchase a “concurrent license” for some use cases – which seem to be related to cloud usage of some sort.

MKL’s licensing terms seem to be quite permissive, but I do remember running into problems when trying to share my Fortran code with other people (lazy people who couldn’t compile my code anymore because of that dependency, so I had to build a binary for them). When sharing Fortran code which is part of a Python package, potential not-so-technical users can go with “pip install” and then end up having something which perfectly integrates with their workflow, which is a big plus.

It’s the first time I see something like what Clavigne has put out, combining F2PY + MKL with so much ease. It does make the sytax a little bit more cumbersome than classic Fortran, tough.

Anyway, I’m really happy to see that there is an open-source Fortran community now. There wasn’t one when I started using Fortran. As I said, I’ll do my best to incorporate all of the much-appreciated feedback. Python, Fortran, and C++ have been my favourite tools so far, I’m just really enthusiastic about my latest finding.

Best,

@martin.d.maas thank you for your post and welcome to the Forum!

At least from our (Fortran) side, I don’t think we see this as a holy war. I personally like such benchmarks (and I know others too), and we even have a repository for such comparisons: GitHub - fortran-lang/benchmarks: Fortran benchmarks, but didn’t have time to actually work on it. The benchmarks you used would be a good one.

Hello, certik! Nice to hear that.

Someone just shared some cython code with me and suggested that numba would be a natural addition as well. I’ll try to polish things up a little bit, and I guess I could wrap all the tests into a python driver that could be used in the Fortran-benchmarks repository.

@martin.d.maas do you need someone to write the Numba version? I have extensive experience with it for exactly that kind of problems.

Surely someone has already spotted that M is a symmetric matrix and therefore only one triangle needs to be calculated and stored.

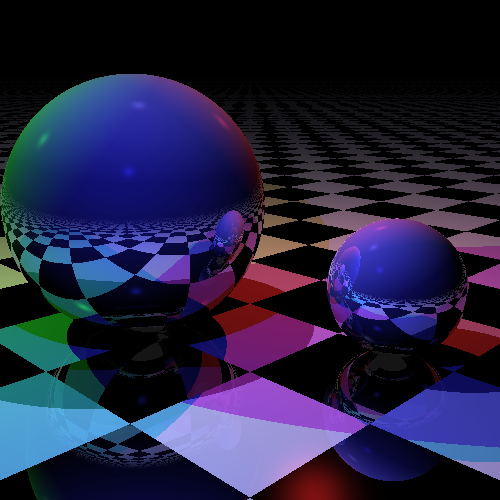

For the ones that like to be nerd-picked, this is a nice benchmark project: GitHub - edin/raytracer: Performance comparison of various compilers

I think it is as close one can get to a real application as a benchmark. And, cool as it is (it even produces a beautiful picture, below), it shows how meaningless these benchmarks can be. We have explored this code, because the Julia benchmark was very poor as reported, and we found that:

- First, the reported time for Julia is simply to high. In practice, for example, for the codes that are posted there, in my computer Julia runs in ~150ms (and Fortran in ~90ms) .

But this was the most unfortunate discovery:

- The example renders a scene with 3 objects. If one increases the number of objects, the relative times between the codes change quite significantly.

Thus, even in a project as interesting and realistic as that, it shows benchmarking codes that differ in less than 2x speed can be quite meaningless, unless if considering comprehensive test cases. One can spend hours trying to optimize something to get a speedup for one specific case of a problem and loose the perspective of the generality of the benchmark.

I did and tested it. It saves half the time of course, I just didn’t want to bias the result by using a different algo.

It’s also quite a bit faster if you return real and imaginary parts as two different arrays.

On this particular point, I think ideally it should not be faster: in Fortran the compiler (I think) is free to represent a complex array as either real/imag interleaving, or first all real, then all imag. The second internal representation I think should be as fast as returning real and imag parts as two different arrays.

I stand corrected. Couldn’t a pointer work just like array sections (slicing)? I.e., the internal array descriptor would have separate pointers to real and imaginary parts.

From performance perspective, I think it is generally faster to separate the real and imaginary parts of a complex array. I think it is for this reason that FFT libraries often provide functions that operate on this format.

I did it as an extra dimension yes. Now I don’t remember how much faster it was and I didn’t note it down…

In this case though I’m using VMKL sincos to compute real and imaginary parts of a complex exponential. I could probably make it faster by casting the output complex array cast as double precision array A() and use A(1:) and A(2:) as the cos and sin outputs of sincos with stride 2.

but that’s a bit involved for a toy problem.

@certik, won’t that result in array temporaries in typical use?

use, intrinsic :: iso_c_binding, only : c_loc, c_size_t

complex, allocatable, target :: c(:)

integer(c_size_t) :: addr_c

c%re = [ 1., 2., 3. ]

c%im = 0

addr_c = transfer( source=c_loc(c), mold=addr_c )

print "(g0,z0)", "main: starting address of c: ", addr_c

call sub( c%re )

contains

subroutine sub( a )

real, intent(in), target :: a(:)

integer(c_size_t) :: addr_a

addr_a = transfer( source=c_loc(a), mold=addr_a )

print "(g0,z0)", "sub: starting address of a: ", addr_a

print *, "a = ", a, "; expected is 1.0, 2.0, 3.0"

end subroutine

end

main: starting address of c: 9F3020

sub: starting address of a: 9FA110

a = 1.00000000 2.00000000 3.00000000 ; expected is 1.0, 2.0, 3.0

In case it’s worth noting, Julia doesn’t do this (except if a package like StructOfArrays is used).

Yes, the interoperable descriptor is a different story. There I guess it would have to use extra dimensions as you suggested.

I can’t see how your example would create extra temporaries? If anything, since you are operating on the real part of a complex array, it would not create any temporaries at all.

@certik, then why does the address of the actual argument differ from that of the proxy in the subprogram in the complex case I showed upthread but not with real below:

use, intrinsic :: iso_c_binding, only : c_loc, c_size_t

real, allocatable, target :: c(:)

integer(c_size_t) :: addr_c

c = [ 1., 2., 3. ]

addr_c = transfer( source=c_loc(c), mold=addr_c )

print "(g0,z0)", "main: starting address of c: ", addr_c

call sub( c )

contains

subroutine sub( a )

real, intent(in), target :: a(:)

integer(c_size_t) :: addr_a

addr_a = transfer( source=c_loc(a), mold=addr_a )

print "(g0,z0)", "sub: starting address of a: ", addr_a

print *, "a = ", a, "; expected is 1.0, 2.0, 3.0"

end subroutine

end

main: starting address of c: 9E3020

sub: starting address of a: 9E3020

a = 1.00000000 2.00000000 3.00000000 ; expected is 1.0, 2.0, 3.0

That would be really nice. I tried to use numba only once, and I don’t even have it currently installed in my PC.

edit: oh, somebody had already posted numba code on HN:

@numba.njit(parallel=True, fastmath=True)

def w(M, a):

n = len(a)

for i in numba.prange(n):

for j in range(n):

M[i,j] = np.exp(1j*k * np.sqrt(a[i]**2 + a[j]**2))

and timed it like this:

%%timeit

n = len(a)

M = np.zeros((n,n), dtype=complex)

w(M, a)